In the last part, we got route leaking between the tenant VRFs working. In this part, we will look at giving the VRFs a way out of the Fabric!

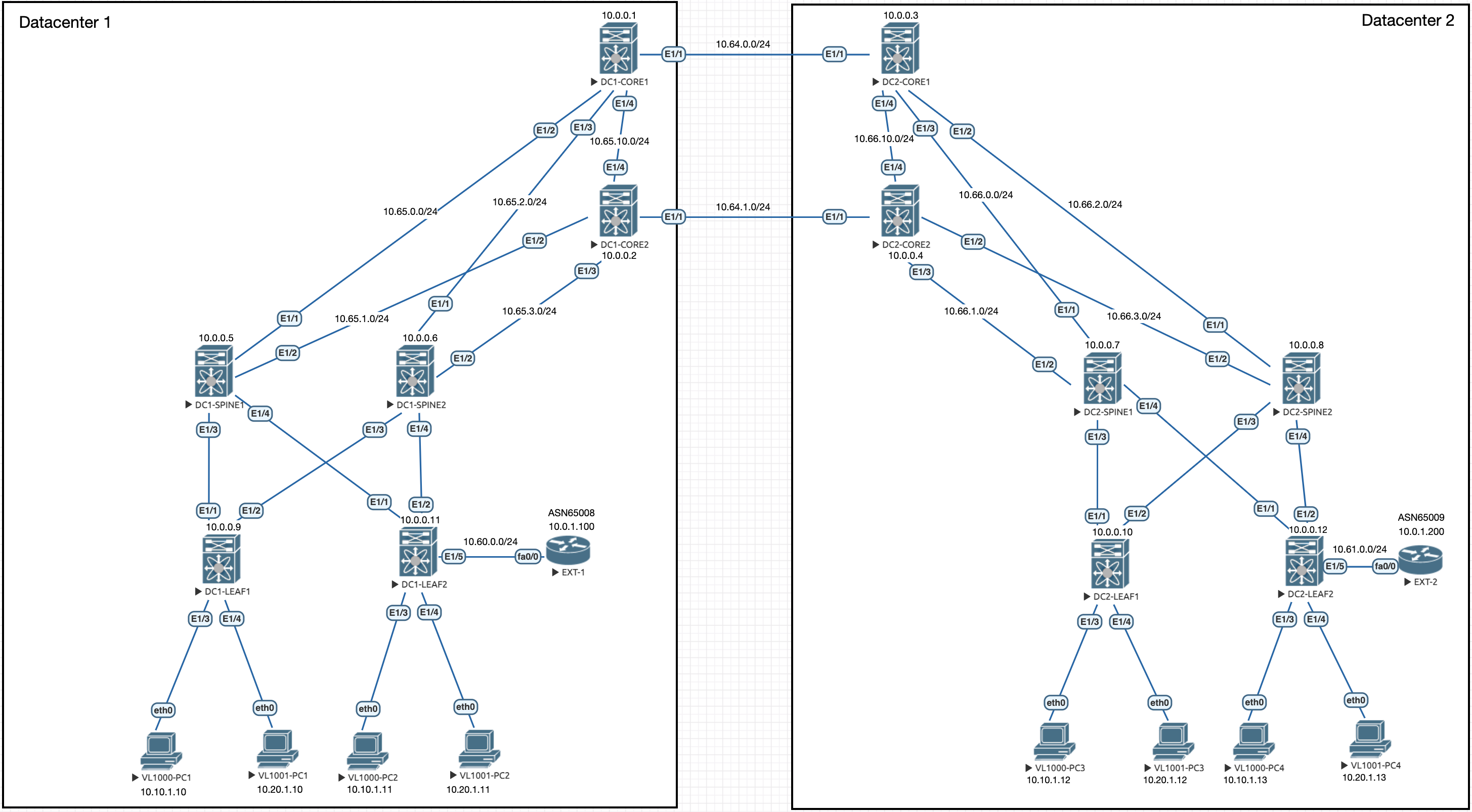

We have added some new devices to the topology. The EXT routers have moved and are now connected to the border nodes we chose in the last guide. These routers have some loopbacks which are to be shared with both of the tenants we have created:

EXT-1:

- Loopback0: 172.16.0.254/24

- Loopback1: 172.16.1.254/24

EXT-2:

- Loopback2: 172.16.2.254/24

- Loopback3: 172.16.3.254/24

The EXT routers will terminate into a shared VRF on the border nodes, and each EXT router will have a BGP peering into this VRF.

Base VRF Configuration

So, we only need to create the shared VRF and leak the routes on DC1-LEAF2 and DC2-LEAF2:

```
vrf context external-shared
  vni 911111
  rd auto
  address-family ipv4 unicast
    route-target both auto
    route-target both auto evpn
```

This is a base configuration; we will complete the leaking at the end to show the full process.

Before we continue, let's quickly go over what this config does. It creates a new VRF called external-shared and assigns it a VNI (required later). Within the address family, we auto-assign the RTs. An auto RT is built from the BGP AS number and the VNI, so it will be 100:911111 in DC1 and 200:911111 in DC2.
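As a rough illustration of where 100:911111 and 200:911111 come from, here is a minimal Python sketch of the ASN:VNI rule behind `route-target both auto`. The function name is our own, and it assumes a 2-byte AS number (as used in this lab); 4-byte AS numbers are simply rejected rather than modelled.

```python
def auto_route_target(asn: int, vni: int) -> str:
    """Sketch of an auto-derived route target in the form '<ASN>:<VNI>'.

    Assumption: a 2-byte AS number. 4-byte AS numbers are outside this sketch.
    """
    if not 1 <= asn <= 65535:
        raise ValueError("this sketch only covers 2-byte AS numbers")
    if not 1 <= vni <= 0xFFFFFF:  # a VNI is a 24-bit value
        raise ValueError("VNI must fit in 24 bits")
    return f"{asn}:{vni}"


# DC1 runs AS 100 and DC2 runs AS 200 in this guide.
for site, asn in (("DC1", 100), ("DC2", 200)):
    print(site, auto_route_target(asn, 911111))
```

Because the AS number differs per DC, the same VNI yields a different RT in each site, which is why the imports later in this guide use 100:… in DC1 and 200:… in DC2.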

L3VNI Setup

In addition to the VRF, we need to configure it as another L3VNI for the fabric. To do this, we need to create a VLAN and an SVI, and then add the VNI to the NVE interface.

This config needs to be done on both border leaves:

```
vlan 911
  vn-segment 911111

interface Vlan911
  no shutdown
  vrf member external-shared
  ip forward

interface nve1
  member vni 911111 associate-vrf
```

This is all the config we need for this part.
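Since an L3VNI only works when all of these pieces line up on the same VNI and VRF, here is a hypothetical Python checker that takes a simplified, hand-built model of a border leaf's config and reports which piece is missing. The dict layout and function name are invented for illustration; this does not parse real NX-OS output.

```python
# Hypothetical, simplified model of one border leaf's config. These dict keys are
# invented for illustration; they are not an NX-OS or API data structure.
def missing_l3vni_pieces(cfg: dict, vrf: str, vni: int) -> list[str]:
    """Return a description of each L3VNI piece that is missing for (vrf, vni)."""
    problems = []
    # 'vni <n>' under 'vrf context <vrf>'
    if cfg.get("vrfs", {}).get(vrf) != vni:
        problems.append(f"VRF {vrf} does not have 'vni {vni}'")
    # 'vlan <id>' with 'vn-segment <vni>'
    vlans = [vlan for vlan, seg in cfg.get("vlans", {}).items() if seg == vni]
    if not vlans:
        problems.append(f"no VLAN with 'vn-segment {vni}'")
    else:
        # 'interface Vlan<id>' with 'vrf member <vrf>' and 'ip forward'
        svis = cfg.get("svis", {})
        if not any(
            svis.get(vlan, {}).get("vrf") == vrf
            and svis.get(vlan, {}).get("ip_forward", False)
            for vlan in vlans
        ):
            problems.append(f"no SVI in VRF {vrf} with 'ip forward' for VLAN(s) {vlans}")
    # 'member vni <vni> associate-vrf' under 'interface nve1'
    if vni not in cfg.get("nve_associate_vrf", set()):
        problems.append(f"VNI {vni} not under nve1 with 'associate-vrf'")
    return problems


# The external-shared L3VNI from this guide, expressed in the hypothetical model.
border_leaf = {
    "vrfs": {"external-shared": 911111},
    "vlans": {911: 911111},
    "svis": {911: {"vrf": "external-shared", "ip_forward": True}},
    "nve_associate_vrf": {911111},
}
print(missing_l3vni_pieces(border_leaf, "external-shared", 911111))  # []
```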

BGP Peering between EXT and LEAF

Now we need to configure the peerings between the EXT routers and the border leaves. Let's do DC1 first:

DC1-LEAF2:

```
interface Ethernet1/5
  vrf member external-shared
  ip address 10.60.0.1/24
  no shutdown

router bgp 100
  vrf external-shared
    address-family ipv4 unicast
    neighbor 10.60.0.2
      remote-as 65008
      address-family ipv4 unicast
```

The BGP config on EXT-1:

```
router bgp 65008
 bgp log-neighbor-changes
 no bgp default ipv4-unicast
 neighbor 10.60.0.1 remote-as 100
 address-family ipv4
  redistribute connected
  neighbor 10.60.0.1 activate
 exit-address-family
```

DC2-LEAF2:

```
interface Ethernet1/5
  vrf member external-shared
  ip address 10.61.0.1/24
  no shutdown

router bgp 200
  vrf external-shared
    address-family ipv4 unicast
    neighbor 10.61.0.2
      remote-as 65009
      address-family ipv4 unicast
```

The BGP config on EXT-2:

```
router bgp 65009
 bgp log-neighbor-changes
 no bgp default ipv4-unicast
 neighbor 10.61.0.1 remote-as 200
 address-family ipv4
  redistribute connected
  neighbor 10.61.0.1 activate
 exit-address-family
```

Because we are redistributing connected networks, we should see some BGP routes on the border leaves:

```
DC1-LEAF2# show bgp vrf external-shared ipv4 unicast
BGP routing table information for VRF external-shared, address family IPv4 Unicast
BGP table version is 7, Local Router ID is 10.60.0.1
Status: s-suppressed, x-deleted, S-stale, d-dampened, h-history, *-valid, >-best
Path type: i-internal, e-external, c-confed, l-local, a-aggregate, r-redist, I-injected
Origin codes: i - IGP, e - EGP, ? - incomplete, | - multipath, & - backup, 2 - best2

Network Next Hop Metric LocPrf Weight Path
*>e10.0.1.100/32 10.60.0.2 0 0 65008 ?
*>e10.60.0.0/24 10.60.0.2 0 0 65008 ?
*>e172.16.0.0/24 10.60.0.2 0 0 65008 ?
*>e172.16.1.0/24 10.60.0.2 0 0 65008 ?
```

```
DC2-LEAF2# show bgp vrf external-shared ipv4 unicast
BGP routing table information for VRF external-shared, address family IPv4 Unicast
BGP table version is 7, Local Router ID is 10.61.0.1
Status: s-suppressed, x-deleted, S-stale, d-dampened, h-history, *-valid, >-best
Path type: i-internal, e-external, c-confed, l-local, a-aggregate, r-redist, I-injected
Origin codes: i - IGP, e - EGP, ? - incomplete, | - multipath, & - backup, 2 - best2

Network Next Hop Metric LocPrf Weight Path
*>e10.0.1.200/32 10.61.0.2 0 0 65009 ?
*>e10.61.0.0/24 10.61.0.2 0 0 65009 ?
*>e172.16.2.0/24 10.61.0.2 0 0 65009 ?
*>e172.16.3.0/24 10.61.0.2 0 0 65009 ?
```

This is a good sign. Now we need to complete the final leaking.
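With one eBGP session per DC, it is easy to copy a `remote-as` from one site into the other. As a hedged sketch (plain Python over values typed in by hand, not something that reads device configs), the check below confirms that each side's `remote-as` equals the other side's local AS, using the AS numbers from this guide. The class and function names are our own.

```python
from typing import NamedTuple


class Side(NamedTuple):
    device: str
    local_as: int   # the AS in 'router bgp <n>' on this device
    remote_as: int  # the 'remote-as' this device configures for its peer


def remote_as_mismatches(sessions: list[tuple[Side, Side]]) -> list[str]:
    """Report any side whose remote-as differs from the other side's local AS."""
    errors = []
    for a, b in sessions:
        for near, far in ((a, b), (b, a)):
            if near.remote_as != far.local_as:
                errors.append(
                    f"{near.device} expects AS {near.remote_as} "
                    f"but {far.device} is AS {far.local_as}"
                )
    return errors


# Values typed in by hand from the peerings in this guide.
SESSIONS = [
    (Side("DC1-LEAF2", 100, 65008), Side("EXT-1", 65008, 100)),
    (Side("DC2-LEAF2", 200, 65009), Side("EXT-2", 65009, 200)),
]
print(remote_as_mismatches(SESSIONS))  # []
```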

VRF Leaking

Now we need to leak the routes from the external-shared VRF into the tenant VRFs, and then the other way around so that we have a return path.

Let's look at DC1 first:

```
vrf context external-shared
  address-family ipv4 unicast
    route-target import 100:900101
    route-target import 100:900101 evpn
    route-target import 100:900102
    route-target import 100:900102 evpn

vrf context overlay-900101
  address-family ipv4 unicast
    route-target import 100:911111

vrf context overlay-900102
  address-family ipv4 unicast
    route-target import 100:911111
```

And DC2:

```
vrf context external-shared
  address-family ipv4 unicast
    route-target import 200:900101
    route-target import 200:900101 evpn
    route-target import 200:900102
    route-target import 200:900102 evpn

vrf context overlay-900101
  address-family ipv4 unicast
    route-target import 200:911111

vrf context overlay-900102
  address-family ipv4 unicast
    route-target import 200:911111
```

Let's explain what we are doing here. For DC1, we first import the RTs of the two tenant VRFs into the external-shared VRF. Note that we also use the evpn keyword on these imports, because we need the IPv4 prefixes and all the individual /32 host routes for the end hosts in order to direct the traffic properly. Then we go into each tenant VRF and import the RT of the external-shared VRF, so that the external prefixes are learned within the fabric. Exactly the same is done in DC2. You will probably want to do some BGP filtering on those /32 host routes.
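As a rough model of the import logic above, the Python sketch below treats each VRF as a set of import RTs and imports a route when at least one of the route's RTs is in that set. The values are DC1's: each VRF's own auto RT plus the imports added in this step. The evpn vs non-evpn distinction and the tenant-to-tenant leaking from the last part are deliberately left out, so the sets are a simplification, not a dump of the real VRF config.

```python
# Simplified, DC1-only model. Each VRF imports these RTs: its own auto RT plus
# the imports added in this step (evpn vs non-evpn imports are not distinguished,
# and the tenant-to-tenant leaking from the last part is left out).
IMPORTS = {
    "external-shared": {"100:911111", "100:900101", "100:900102"},
    "overlay-900101": {"100:900101", "100:911111"},
    "overlay-900102": {"100:900102", "100:911111"},
}


def importing_vrfs(route_rts: set[str]) -> list[str]:
    """A VRF imports a route if any of the route's RTs is in its import set."""
    return sorted(vrf for vrf, rts in IMPORTS.items() if route_rts & rts)


print(importing_vrfs({"100:911111"}))  # e.g. 172.16.0.0/24 learned from EXT-1
print(importing_vrfs({"100:900101"}))  # e.g. 10.10.1.0/24 from tenant 1
```

An external route tagged 100:911111 lands in both tenant VRFs, while a tenant 1 route tagged 100:900101 lands in external-shared, which gives the two directions of the leak.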

Let's see what this has done:

```
DC1-LEAF2# show bgp vrf overlay-900101 ipv4 unicast | beg Network
Network Next Hop Metric LocPrf Weight Path
* l0.0.0.0/0 0.0.0.0 100 32768 i
*>l 0.0.0.0 100 32768 i
*>e10.0.1.100/32 10.60.0.2 0 0 65008 ?
* i10.10.1.0/24 10.0.0.9 100 0 i
*>l 0.0.0.0 100 32768 i
*>i10.10.1.10/32 10.0.0.9 100 0 i
*>i10.10.1.12/32 10.111.111.1 2000 100 0 200 i
* i10.20.1.0/24 10.0.0.9 100 0 i
*>l 0.0.0.0 100 32768 i
*>i10.20.1.10/32 10.0.0.9 100 0 i
*>i10.20.1.12/32 10.111.111.1 2000 100 0 200 i
*>e10.60.0.0/24 10.60.0.2 0 0 65008 ?
*>e172.16.0.0/24 10.60.0.2 0 0 65008 ?
*>e172.16.1.0/24 10.60.0.2 0 0 65008 ?
```

This is the BGP table for the external-shared VRF on DC1's border node. We can see the 172.16 networks at the bottom, as we expect. However, we can also see the /24 subnets from the two tenant VRFs, as well as /32 host routes. These will be present on the EXT router too, hence the earlier mention of filtering.

Speaking of the EXT router, let's check the BGP table on EXT-1:

```
EXT-1#show ip bgp | begin Network
Network Next Hop Metric LocPrf Weight Path
*> 0.0.0.0 10.60.0.1 0 100 i
*> 10.0.1.100/32 0.0.0.0 0 32768 ?
*> 10.10.1.0/24 10.60.0.1 0 100 i
*> 10.10.1.10/32 10.60.0.1 0 100 i
*> 10.10.1.12/32 10.60.0.1 0 100 200 i
*> 10.20.1.0/24 10.60.0.1 0 100 i
*> 10.20.1.10/32 10.60.0.1 0 100 i
*> 10.20.1.12/32 10.60.0.1 0 100 200 i
*> 10.60.0.0/24 0.0.0.0 0 32768 ?
*> 172.16.0.0/24 0.0.0.0 0 32768 ?
*> 172.16.1.0/24 0.0.0.0 0 32768 ?
```

We can see all the networks we expect here, including a suspicious-looking default route that we probably also want to filter out: it's unlikely we want a default route pointing back into the fabric from the outside. It's normally the other way around!

Let's do some initial testing while we are on EXT-1:

```
EXT-1#ping 10.10.1.10
Type escape sequence to abort.
Sending 5, 100-byte ICMP Echos to 10.10.1.10, timeout is 2 seconds:
!!!!!
Success rate is 100 percent (5/5), round-trip min/avg/max = 12/16/20 ms
EXT-1#ping 10.20.1.10
Type escape sequence to abort.
Sending 5, 100-byte ICMP Echos to 10.20.1.10, timeout is 2 seconds:
!!!!!
Success rate is 100 percent (5/5), round-trip min/avg/max = 12/28/64 ms
EXT-1#ping 10.10.1.11
Type escape sequence to abort.
Sending 5, 100-byte ICMP Echos to 10.10.1.11, timeout is 2 seconds:
!!!!!
Success rate is 100 percent (5/5), round-trip min/avg/max = 8/13/28 ms
EXT-1#ping 10.20.1.11
Type escape sequence to abort.
Sending 5, 100-byte ICMP Echos to 10.20.1.11, timeout is 2 seconds:
!!!!!
Success rate is 100 percent (5/5), round-trip min/avg/max = 1/14/52 ms
```

From the above output, we can see that EXT-1 can communicate with hosts within the local DC. Let's try EXT-2 too:

```
EXT-2#ping 10.10.1.12
Type escape sequence to abort.
Sending 5, 100-byte ICMP Echos to 10.10.1.12, timeout is 2 seconds:
!!!!!
Success rate is 100 percent (5/5), round-trip min/avg/max = 12/20/44 ms
EXT-2#ping 10.20.1.12
Type escape sequence to abort.
Sending 5, 100-byte ICMP Echos to 10.20.1.12, timeout is 2 seconds:
!!!!!
Success rate is 100 percent (5/5), round-trip min/avg/max = 4/14/20 ms
EXT-2#ping 10.10.1.13
Type escape sequence to abort.
Sending 5, 100-byte ICMP Echos to 10.10.1.13, timeout is 2 seconds:
!!!!!
Success rate is 100 percent (5/5), round-trip min/avg/max = 8/9/12 ms
EXT-2#ping 10.20.1.13
Type escape sequence to abort.
Sending 5, 100-byte ICMP Echos to 10.20.1.13, timeout is 2 seconds:
!!!!!
Success rate is 100 percent (5/5), round-trip min/avg/max = 8/12/20 ms
```

That's working within the local DC. Emphasis on the LOCAL part of that. Let's try pinging VL1000-PC3, which is in the other DC, from EXT-1:

```
EXT-1#ping 10.10.1.12
Type escape sequence to abort.
Sending 5, 100-byte ICMP Echos to 10.10.1.12, timeout is 2 seconds:
.....
Success rate is 0 percent (0/5)
```

You may be able to tell why this doesn't work; if not, let's run through it.

When you leak a route from one VRF into another, the leaked route will not be advertised into the VPNv4 BGP process unless a specific command allows it. At the moment, the networks advertised by the EXT routers are only known locally on each border leaf. But we have default routes, don't we?! Indeed we do; however, they are working against us at the moment. Think about it: traffic from EXT-1 will reach VL1000-PC3, but the return traffic will follow the local default route in DC2 and be routed towards the DC2 border leaf, which isn't what we want!
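To make the return-path problem concrete, here is a minimal longest-prefix-match sketch in Python using the standard `ipaddress` module. The tables are a hypothetical, trimmed view of overlay-900101 on the DC2 leaf where VL1000-PC3 lives, and we assume EXT-1 sources its pings from 10.60.0.2, its address on the link to DC1-LEAF2. With only the default route, the reply heads to the DC2 border leaf; once a more specific route for 10.60.0.0/24 pointing back towards DC1 exists, longest-prefix match sends the reply the right way.

```python
import ipaddress


def lookup(table: dict[str, str], dst: str) -> str:
    """Longest-prefix match: next hop of the most specific route covering dst."""
    addr = ipaddress.ip_address(dst)
    candidates = [ipaddress.ip_network(p) for p in table if addr in ipaddress.ip_network(p)]
    if not candidates:
        return "drop"
    best = max(candidates, key=lambda net: net.prefixlen)
    return table[str(best)]


# Hypothetical, trimmed view of overlay-900101 on the DC2 leaf hosting VL1000-PC3.
before = {
    "0.0.0.0/0": "DC2 border leaf",
    "10.10.1.0/24": "local SVI",
}
# The same table once DC1's external prefixes are learned across the DCI.
after = dict(before, **{
    "10.60.0.0/24": "DC1 border leaf (via DCI)",
    "172.16.0.0/24": "DC1 border leaf (via DCI)",
})

reply_to = "10.60.0.2"  # assumed source address of EXT-1's pings
print(lookup(before, reply_to))  # DC2 border leaf
print(lookup(after, reply_to))   # DC1 border leaf (via DCI)
```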

Let's show that the CORE switches don't know about the 172.16 networks:

```
DC1-CORE1# show bgp vpnv4 unicast
BGP routing table information for VRF default, address family VPNv4 Unicast
BGP table version is 1095, Local Router ID is 10.0.0.1
Status: s-suppressed, x-deleted, S-stale, d-dampened, h-history, *-valid, >-best
Path type: i-internal, e-external, c-confed, l-local, a-aggregate, r-redist, I-injected
Origin codes: i - IGP, e - EGP, ? - incomplete, | - multipath, & - backup, 2 - best2

Network Next Hop Metric LocPrf Weight Path
Route Distinguisher: 10.0.0.1:4 (VRF overlay-900101)
*>i0.0.0.0/0 10.0.0.11 100 0 i
* e 10.222.222.1 2000 0 200 i
* i10.10.1.0/24 10.0.0.11 100 0 i
*>i 10.0.0.9 100 0 i
* e 10.222.222.1 2000 0 200 i
*>i10.10.1.10/32 10.0.0.9 100 0 i
*>i10.10.1.11/32 10.0.0.11 100 0 i
* i10.10.1.12/32 10.111.111.2 2000 100 0 200 i
*>e 10.222.222.1 2000 0 200 i
* i10.10.1.13/32 10.111.111.2 2000 100 0 200 i
*>e 10.222.222.1 2000 0 200 i

Route Distinguisher: 10.0.0.1:5 (VRF overlay-900102)
*>i0.0.0.0/0 10.0.0.11 100 0 i
* e 10.222.222.1 2000 0 200 i
* i10.20.1.0/24 10.0.0.11 100 0 i
*>i 10.0.0.9 100 0 i
* e 10.222.222.1 2000 0 200 i
*>i10.20.1.10/32 10.0.0.9 100 0 i
*>i10.20.1.11/32 10.0.0.11 100 0 i
* i10.20.1.12/32 10.111.111.2 2000 100 0 200 i
*>e 10.222.222.1 2000 0 200 i
* i10.20.1.13/32 10.111.111.2 2000 100 0 200 i
*>e 10.222.222.1 2000 0 200 i
```

This proves what we just said.

Now, let's fix it!

Allowing the VPN Routes to Propagate

The following should be applied to both border leaves:

```
vrf context overlay-900101
  address-family ipv4 unicast
    import vrf advertise-vpn

vrf context overlay-900102
  address-family ipv4 unicast
    import vrf advertise-vpn
```

The command basically configures the VRF to allow routes that are imported from another VRF to be exported to a BGP VPN, which here is our internal fabric.
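The behaviour described here can be pictured as a simple filter on a tenant VRF's routes. The Python sketch below is our own simplification, not NX-OS logic: routes native to the tenant VRF are exported to the BGP VPN, while routes leaked in from another local VRF (here, external-shared) are only exported when `import vrf advertise-vpn` is configured. The route list is an illustrative, trimmed view of overlay-900101 on DC1-LEAF2.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class VrfRoute:
    prefix: str
    leaked_from: Optional[str] = None  # None means the route is native to this VRF


def exported_to_vpn(routes: list[VrfRoute], advertise_vpn: bool) -> list[str]:
    """Simplified model: native routes are exported; leaked routes only with advertise-vpn."""
    return [r.prefix for r in routes if r.leaked_from is None or advertise_vpn]


# Illustrative, trimmed contents of overlay-900101 on DC1-LEAF2.
overlay_900101 = [
    VrfRoute("10.10.1.0/24"),
    VrfRoute("172.16.0.0/24", leaked_from="external-shared"),
    VrfRoute("172.16.1.0/24", leaked_from="external-shared"),
]
print(exported_to_vpn(overlay_900101, advertise_vpn=False))  # ['10.10.1.0/24']
print(exported_to_vpn(overlay_900101, advertise_vpn=True))
```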

We should see the 172.16 routes propagated on the CORE switches now:

```
DC1-CORE1# show bgp vpnv4 unicast
BGP routing table information for VRF default, address family VPNv4 Unicast
BGP table version is 10395, Local Router ID is 10.0.0.1
Status: s-suppressed, x-deleted, S-stale, d-dampened, h-history, *-valid, >-best
Path type: i-internal, e-external, c-confed, l-local, a-aggregate, r-redist, I-injected
Origin codes: i - IGP, e - EGP, ? - incomplete, | - multipath, & - backup, 2 - best2

Network Next Hop Metric LocPrf Weight Path
Route Distinguisher: 10.0.0.1:4 (VRF overlay-900101)
*>i0.0.0.0/0 10.0.0.11 100 0 i
* e 10.222.222.1 2000 0 200 i
*>i10.0.1.100/32 10.0.0.11 0 100 0 65008 ?
* i10.0.1.200/32 10.111.111.2 1 100 0 200 65009 ?
*>e 10.222.222.1 1 0 200 65009 ?
* i10.10.1.0/24 10.0.0.11 100 0 i
*>i 10.0.0.9 100 0 i
* e 10.222.222.1 2000 0 200 i
*>i10.10.1.10/32 10.0.0.9 100 0 i
*>i10.10.1.11/32 10.0.0.11 100 0 i
* i10.10.1.12/32 10.111.111.2 2000 100 0 200 i
*>e 10.222.222.1 2000 0 200 i
* i10.10.1.13/32 10.111.111.2 2000 100 0 200 i
*>e 10.222.222.1 2000 0 200 i
*>i10.20.1.0/24 10.0.0.11 100 0 i
* e 10.222.222.1 2000 0 200 i
*>i10.60.0.0/24 10.0.0.11 0 100 0 65008 ?
* i10.61.0.0/24 10.111.111.2 1 100 0 200 65009 ?
*>e 10.222.222.1 1 0 200 65009 ?
*>i172.16.0.0/24 10.0.0.11 0 100 0 65008 ?
*>i172.16.1.0/24 10.0.0.11 0 100 0 65008 ?
* i172.16.2.0/24 10.111.111.2 1 100 0 200 65009 ?
*>e 10.222.222.1 1 0 200 65009 ?
* i172.16.3.0/24 10.111.111.2 1 100 0 200 65009 ?
*>e 10.222.222.1 1 0 200 65009 ?

Route Distinguisher: 10.0.0.1:5 (VRF overlay-900102)
*>i0.0.0.0/0 10.0.0.11 100 0 i
* e 10.222.222.1 2000 0 200 i
*>i10.0.1.100/32 10.0.0.11 0 100 0 65008 ?
* i10.0.1.200/32 10.111.111.2 1 100 0 200 65009 ?
*>e 10.222.222.1 1 0 200 65009 ?
*>i10.10.1.0/24 10.0.0.11 100 0 i
* e 10.222.222.1 2000 0 200 i
* i10.20.1.0/24 10.0.0.11 100 0 i
*>i 10.0.0.9 100 0 i
* e 10.222.222.1 2000 0 200 i
*>i10.20.1.10/32 10.0.0.9 100 0 i
*>i10.20.1.11/32 10.0.0.11 100 0 i
* i10.20.1.12/32 10.111.111.2 2000 100 0 200 i
*>e 10.222.222.1 2000 0 200 i
* i10.20.1.13/32 10.111.111.2 2000 100 0 200 i
*>e 10.222.222.1 2000 0 200 i
*>i10.60.0.0/24 10.0.0.11 0 100 0 65008 ?
* i10.61.0.0/24 10.111.111.2 1 100 0 200 65009 ?
*>e 10.222.222.1 1 0 200 65009 ?
*>i172.16.0.0/24 10.0.0.11 0 100 0 65008 ?
*>i172.16.1.0/24 10.0.0.11 0 100 0 65008 ?
* i172.16.2.0/24 10.111.111.2 1 100 0 200 65009 ?
*>e 10.222.222.1 1 0 200 65009 ?
* i172.16.3.0/24 10.111.111.2 1 100 0 200 65009 ?
*>e 10.222.222.1 1 0 200 65009 ?
```

We can see the 172.16 networks here. Note that DC1-CORE1 has two paths for each of the DC2 networks (172.16.2.0/24 and 172.16.3.0/24). This is because this core switch is also peered with DC1-CORE2. The preferred path is direct over the DCI to DC2, and the secondary path is via the iBGP peering to DC1-CORE2, meaning we have resiliency!
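Why is the path over the DCI preferred? Both paths for 172.16.2.0/24 tie on weight, local preference, AS path length, origin and MED, so the eBGP path wins over the iBGP one. The Python sketch below models only that subset of BGP best-path selection; it is a simplification for illustration, not the full NX-OS algorithm (it skips locally originated routes, always compares MED even though real BGP normally compares it only between paths from the same neighbouring AS, and omits later tie-breakers such as IGP metric and router ID).

```python
from dataclasses import dataclass, field

# Lower rank is better for origin: IGP (i) < EGP (e) < incomplete (?).
ORIGIN_RANK = {"i": 0, "e": 1, "?": 2}


@dataclass
class Path:
    next_hop: str
    as_path: list[int] = field(default_factory=list)
    origin: str = "i"
    med: int = 0
    local_pref: int = 100
    weight: int = 0
    ebgp: bool = False


def best_path(paths: list[Path]) -> Path:
    """Simplified best-path selection over a subset of the real decision steps.

    Order: highest weight, highest local preference, shortest AS path,
    lowest origin, lowest MED (compared unconditionally here), then eBGP over iBGP.
    """
    return min(
        paths,
        key=lambda p: (
            -p.weight,
            -p.local_pref,
            len(p.as_path),
            ORIGIN_RANK[p.origin],
            p.med,
            0 if p.ebgp else 1,
        ),
    )


# The two paths DC1-CORE1 has for 172.16.2.0/24 in the output above.
via_core2 = Path("10.111.111.2", [200, 65009], origin="?", med=1, ebgp=False)
via_dci = Path("10.222.222.1", [200, 65009], origin="?", med=1, ebgp=True)
print(best_path([via_core2, via_dci]).next_hop)  # 10.222.222.1
```

The same model also shows why a locally learned route beats one arriving over the DCI: the local path has a shorter AS path, which is decided before the eBGP vs iBGP step.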

Let's try that ping again from EXT-1 to VL1000-PC3:

```
EXT-1#ping 10.10.1.12
Type escape sequence to abort.
Sending 5, 100-byte ICMP Echos to 10.10.1.12, timeout is 2 seconds:
!!!!!
Success rate is 100 percent (5/5), round-trip min/avg/max = 80/120/140 ms
```

Success! We have connectivity all the way into DC2.

Finally, as a bonus, let's do some route cleanup.

Bonus: BGP Route Cleanup

At this point, the EXT routers' BGP tables will be cluttered with prefixes they don't need. Let's check:

```
EXT-1#show bgp ipv4 unicast | beg Network
Network Next Hop Metric LocPrf Weight Path
*> 0.0.0.0 10.60.0.1 0 100 i
*> 10.0.1.100/32 0.0.0.0 0 32768 ?
*> 10.0.1.200/32 10.60.0.1 0 100 200 65009 ?
*> 10.10.1.0/24 10.60.0.1 0 100 i
*> 10.10.1.10/32 10.60.0.1 0 100 i
*> 10.10.1.12/32 10.60.0.1 0 100 200 i
*> 10.10.1.13/32 10.60.0.1 0 100 200 i
*> 10.20.1.0/24 10.60.0.1 0 100 i
*> 10.20.1.10/32 10.60.0.1 0 100 i
*> 10.20.1.12/32 10.60.0.1 0 100 200 i
*> 10.20.1.13/32 10.60.0.1 0 100 200 i
*> 10.60.0.0/24 0.0.0.0 0 32768 ?
*> 10.61.0.0/24 10.60.0.1 0 100 200 65009 ?
*> 172.16.0.0/24 0.0.0.0 0 32768 ?
*> 172.16.1.0/24 0.0.0.0 0 32768 ?
*> 172.16.2.0/24 10.60.0.1 0 100 200 65009 ?
*> 172.16.3.0/24 10.60.0.1 0 100 200 65009 ?
```

```
EXT-2#show bgp ipv4 unicast | beg Network
Network Next Hop Metric LocPrf Weight Path
*> 0.0.0.0 10.61.0.1 0 200 i
*> 10.0.1.100/32 10.61.0.1 0 200 100 65008 ?
*> 10.0.1.200/32 0.0.0.0 0 32768 ?
*> 10.10.1.0/24 10.61.0.1 0 200 i
*> 10.10.1.10/32 10.61.0.1 0 200 100 i
*> 10.10.1.11/32 10.61.0.1 0 200 100 i
*> 10.10.1.12/32 10.61.0.1 0 200 i
*> 10.20.1.0/24 10.61.0.1 0 200 i
*> 10.20.1.10/32 10.61.0.1 0 200 100 i
*> 10.20.1.11/32 10.61.0.1 0 200 100 i
*> 10.20.1.12/32 10.61.0.1 0 200 i
*> 10.60.0.0/24 10.61.0.1 0 200 100 65008 ?
*> 10.61.0.0/24 0.0.0.0 0 32768 ?
*> 172.16.0.0/24 10.61.0.1 0 200 100 65008 ?
*> 172.16.1.0/24 10.61.0.1 0 200 100 65008 ?
*> 172.16.2.0/24 0.0.0.0 0 32768 ?
*> 172.16.3.0/24 0.0.0.0 0 32768 ?
```

There are three potential things to clean up in these BGP tables. First, we are getting all the /32 host routes; while we need these on the border leaves, we don't need them on the EXT routers, because they all point at the same next hop. Second, a default route is being advertised. Third, each EXT router is learning the routes from the opposite EXT router; for example, EXT-1 is seeing 172.16.2.0/24 and 172.16.3.0/24, which come from EXT-2. This may not be desirable!
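Before applying the filter, it helps to be clear on how a prefix-list entry with an `le` bound matches. The Python sketch below is a simplified model built on the standard `ipaddress` module, not Cisco code: a route matches an entry if it falls inside the entry's network and its prefix length lies between the entry's length and `le`; entries are checked in sequence order and anything left unmatched is denied. The entries mirror the `to-ext-1` prefix-list used on DC1-LEAF2.

```python
import ipaddress
from typing import NamedTuple, Optional


class Entry(NamedTuple):
    seq: int
    action: str            # "permit" or "deny"
    network: str           # e.g. "10.10.1.0/24"
    ge: Optional[int] = None
    le: Optional[int] = None


def entry_matches(entry: Entry, prefix: str) -> bool:
    """True if prefix falls inside entry.network with an allowed prefix length."""
    route = ipaddress.ip_network(prefix)
    net = ipaddress.ip_network(entry.network)
    if route.version != net.version or not route.subnet_of(net):
        return False
    if entry.ge is None and entry.le is None:
        return route.prefixlen == net.prefixlen  # no ge/le: exact length only
    low = entry.ge if entry.ge is not None else net.prefixlen
    high = entry.le if entry.le is not None else route.max_prefixlen
    return low <= route.prefixlen <= high


def evaluate(prefix_list: list[Entry], prefix: str) -> str:
    """First matching entry (lowest seq) wins; no match means implicit deny."""
    for entry in sorted(prefix_list, key=lambda e: e.seq):
        if entry_matches(entry, prefix):
            return entry.action
    return "deny"


# Mirrors the to-ext-1 entries on DC1-LEAF2.
TO_EXT_1 = [
    Entry(10, "permit", "10.10.1.0/24", le=31),
    Entry(15, "permit", "10.20.1.0/24", le=31),
]

for p in ["10.10.1.0/24", "10.10.1.10/32", "0.0.0.0/0", "172.16.2.0/24"]:
    print(p, evaluate(TO_EXT_1, p))
```

Only the tenant /24 is permitted; the /32 host route, the default route and EXT-2's 172.16.2.0/24 all fall through to the implicit deny, which covers all three cleanup items with two entries.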

Let's tackle all three from the fabric side:

DC1-LEAF2:

ip prefix-list to-ext-1 seq 10 permit 10.10.1.0/24 le 31

ip prefix-list to-ext-1 seq 15 permit 10.20.1.0/24 le 31

route-map to-ext-1 permit 10

match ip address prefix-list to-ext-1

router bgp 100

vrf external-shared

neighbor 10.60.0.2

address-family ipv4 unicast

route-map to-ext-1 outDC2-LEAF2:

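```
ip prefix-list to-ext-2 seq 10 permit 10.10.1.0/24 le 31
ip prefix-list to-ext-2 seq 15 permit 10.20.1.0/24 le 31

route-map to-ext-2 permit 10
  match ip address prefix-list to-ext-2

router bgp 200
  vrf external-shared
    neighbor 10.61.0.2
      address-family ipv4 unicast
        route-map to-ext-2 out
```

The prefix-lists permit the tenant /24 subnets (and anything more specific up to /31) but not the /32 host routes. Everything else, including the default route and the other EXT router's prefixes, is dropped by the implicit deny.

We now have much cleaner route tables: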

```
EXT-1#show bgp ipv4 unicast | beg Network
Network Next Hop Metric LocPrf Weight Path
*> 10.0.1.100/32 0.0.0.0 0 32768 ?
*> 10.10.1.0/24 10.60.0.1 0 100 i
*> 10.20.1.0/24 10.60.0.1 0 100 i
*> 10.60.0.0/24 0.0.0.0 0 32768 ?
*> 172.16.0.0/24 0.0.0.0 0 32768 ?
*> 172.16.1.0/24 0.0.0.0 0 32768 ?
```

```
EXT-2#show bgp ipv4 unicast | beg Network
Network Next Hop Metric LocPrf Weight Path
*> 10.0.1.200/32 0.0.0.0 0 32768 ?
*> 10.10.1.0/24 10.61.0.1 0 200 i
*> 10.20.1.0/24 10.61.0.1 0 200 i
*> 10.61.0.0/24 0.0.0.0 0 32768 ?
*> 172.16.2.0/24 0.0.0.0 0 32768 ?
*> 172.16.3.0/24 0.0.0.0 0 32768 ?
```

And we still have inter-DC connectivity:

```
EXT-1#ping 10.10.1.12
Type escape sequence to abort.
Sending 5, 100-byte ICMP Echos to 10.10.1.12, timeout is 2 seconds:
!!!!!
Success rate is 100 percent (5/5), round-trip min/avg/max = 16/21/40 ms
```

```
EXT-2#ping 10.10.1.10
Type escape sequence to abort.
Sending 5, 100-byte ICMP Echos to 10.10.1.10, timeout is 2 seconds:
!!!!!
Success rate is 100 percent (5/5), round-trip min/avg/max = 16/34/80 ms
```

That's it for now. We have successfully implemented a dual-DC VXLAN fabric with multiple tenant VRFs that can communicate with each other, and we have provided the fabric with external connectivity.

4 Comments

Sam · 29th October 2024 at 9:38 pm

Thank you. Simply a really good article, and it's easy to understand.

Thanks-

Nick Carlton · 9th November 2024 at 10:52 pm

Thanks Sam!

Charles · 4th November 2024 at 4:41 pm

Hey Nick, thanks for the information. I'm currently working on a lab for external connectivity in a VXLAN Multi-Site EVPN. Right now everything works fine except for the fact that I have asymmetric traffic for endpoints in Site 2. This case is 2 DCs connected externally to the branches for HA. Any pointers to either enable host-based routing or configure a preferred site for all routing?

Nick Carlton · 9th November 2024 at 10:52 pm

Hi Charles, when you say asymmetric, what is the traffic path you are seeing and from where? Host based routing can be achieved by not filtering the incoming/outgoing routes from the fabric as all the /32s should be sent by default due to the VRF leak. However, unless the external router is dual homed between the DCs (unlikely in the real world) then host based routing may not have any impact on traffic flow as it’s all going to be pointing to the same place. On preferred routing, AS Path should take care of this for you. A route locally learned in DC2 should be preferred in DC2 over a route learnt in DC1 and then advertised over the DCI. Unless your routes are coming in with other attributes that are skewing the BGP selection process.

Hope this helps!

Thanks

Nick