So, now we have external connectivity working, but there is a certain scenario where it breaks.

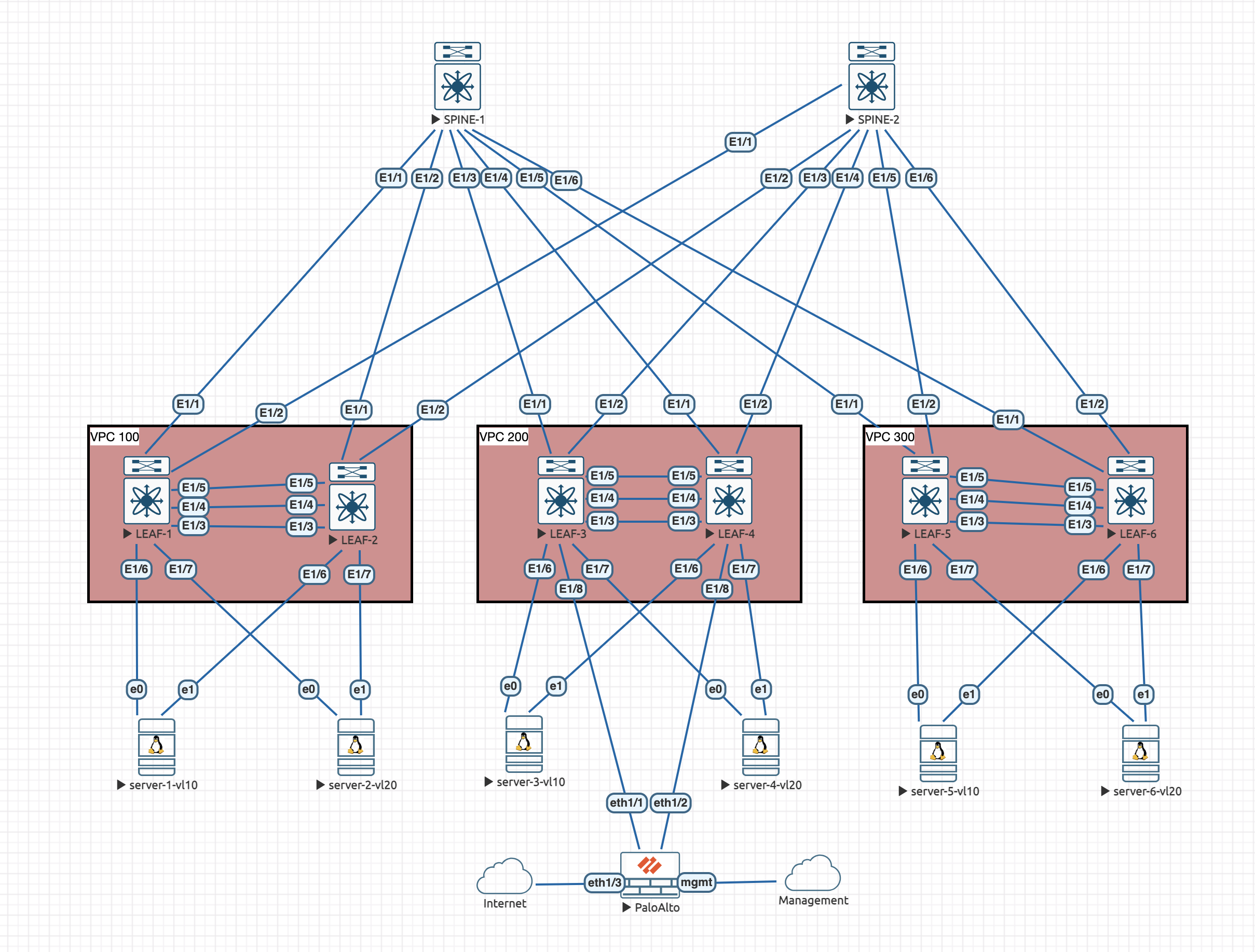

Lets take a look at the topology:

The issue

If we were on either server-3-vl10 or server-4-vl20 and the LACP hashing decided to forward traffic towards LEAF-3, what would happen if the connection between LEAF-3 and the Firewall was down?

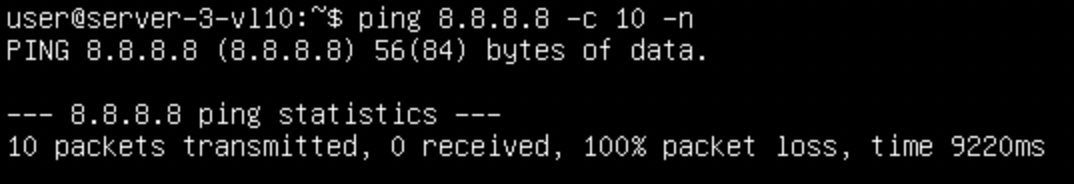

Well, this:

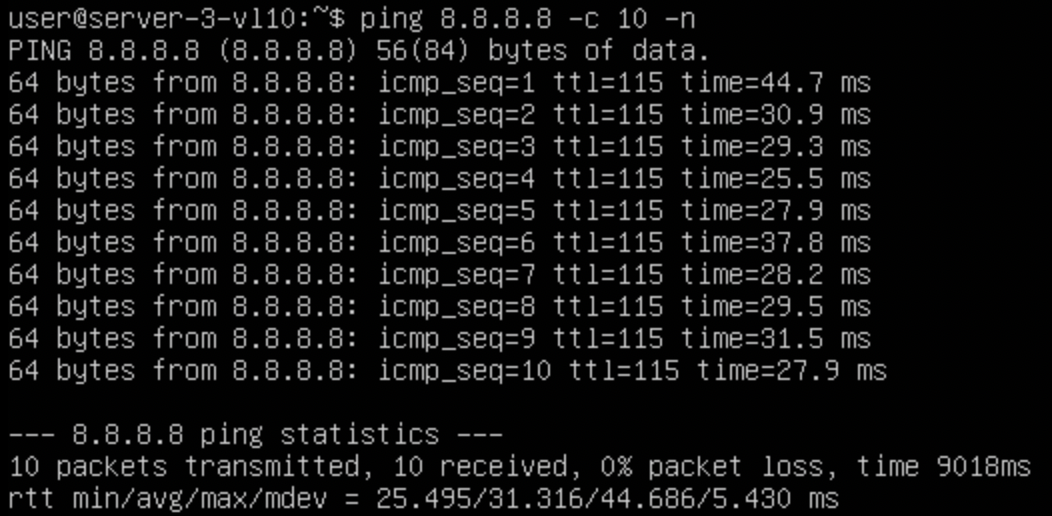

Any traffic trying to leave the fabric just fails. Because the server uses an LACP bundle, some destinations will likely still work: depending on the hash, some flows will go to LEAF-4, which is fully operational. We can see this by trying another IP and checking whether it's hashed differently:

Interesting! But it's still bust.
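The per-flow behaviour can be sketched in Python. This is a minimal illustration only, assuming CRC32 over the source/destination IP pair as the hash and two hypothetical member links; real port-channels and bonds hash on their own configurable fields with vendor-specific algorithms, and the addresses used here are documentation-range placeholders.

```python
import zlib

# Hypothetical member links of the server's LACP bundle (illustrative names).
MEMBERS = ["uplink-to-LEAF-3", "uplink-to-LEAF-4"]


def pick_member(src_ip: str, dst_ip: str, members: list = MEMBERS) -> str:
    """Choose a bundle member for a flow by hashing its source/destination IPs.

    CRC32 is a stand-in: real port-channels hash on configurable fields
    (IPs, ports, MACs) using vendor-specific algorithms.
    """
    key = f"{src_ip}|{dst_ip}".encode()
    return members[zlib.crc32(key) % len(members)]


if __name__ == "__main__":
    server_ip = "192.0.2.10"  # hypothetical server address
    for dst in ["198.51.100.1", "198.51.100.2", "203.0.113.7"]:
        # The same (src, dst) pair always maps to the same member link.
        print(f"{dst:>15} -> {pick_member(server_ip, dst)}")
```

Because the hash is deterministic, a given destination always lands on the same member link, which is why one IP can fail consistently while another happens to be sent towards LEAF-4.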

This has happened because the BGP peering from LEAF-3 to the Firewall has gone down:

leaf-3# show bgp vrf external ipv4 unicast summary

BGP summary information for VRF external, address family IPv4 Unicast

BGP router identifier 10.32.60.1, local AS number 64500

BGP table version is 35, IPv4 Unicast config peers 1, capable peers 0

5 network entries and 23 paths using 980 bytes of memory

BGP attribute entries [21/7560], BGP AS path entries [1/6]

BGP community entries [0/0], BGP clusterlist entries [10/40]

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd

10.32.60.3 4 64520 0 0 0 0 0 00:08:56 Idle

This is interesting: surely this IP address is still reachable? The vPC peer link is still connected, and this is a peering from an SVI:

leaf-3# ping 10.32.60.3 vrf external count 10

PING 10.32.60.3 (10.32.60.3): 56 data bytes

64 bytes from 10.32.60.3: icmp_seq=0 ttl=62 time=22.881 ms

64 bytes from 10.32.60.3: icmp_seq=1 ttl=62 time=5.915 ms

64 bytes from 10.32.60.3: icmp_seq=2 ttl=62 time=5.303 ms

64 bytes from 10.32.60.3: icmp_seq=3 ttl=62 time=4.965 ms

64 bytes from 10.32.60.3: icmp_seq=4 ttl=62 time=6.639 ms

64 bytes from 10.32.60.3: icmp_seq=5 ttl=62 time=5.1 ms

64 bytes from 10.32.60.3: icmp_seq=6 ttl=62 time=6.027 ms

64 bytes from 10.32.60.3: icmp_seq=7 ttl=62 time=6.55 ms

64 bytes from 10.32.60.3: icmp_seq=8 ttl=62 time=6.874 ms

64 bytes from 10.32.60.3: icmp_seq=9 ttl=62 time=6.839 ms

--- 10.32.60.3 ping statistics ---

10 packets transmitted, 10 packets received, 0.00% packet loss

round-trip min/avg/max = 4.965/7.709/22.881 ms

So it pings, but we can't establish a BGP session? Before we look at the fix, let's take a look at why the end clients have lost connectivity.

Looking at the routing table on LEAF-3, it does still have a default route:

LEAF-3# show ip route 0.0.0.0/0 vrf all

IP Route Table for VRF "OVERLAY-TENANT1"

0.0.0.0/0, ubest/mbest: 1/0

*via 10.0.1.4%default, [200/0], 00:07:31, bgp-64500, internal, tag 64520, segid: 100999 tunnelid: 0xa000104 encap: VXLAN

IP Route Table for VRF "OVERLAY-TENANT2"

0.0.0.0/0, ubest/mbest: 1/0

*via 10.0.1.4%default, [200/0], 00:07:31, bgp-64500, internal, tag 64520, segid: 100999 (Asymmetric) tunnelid: 0xa000104 encap: VXLAN

IP Route Table for VRF "external"

0.0.0.0/0, ubest/mbest: 1/0

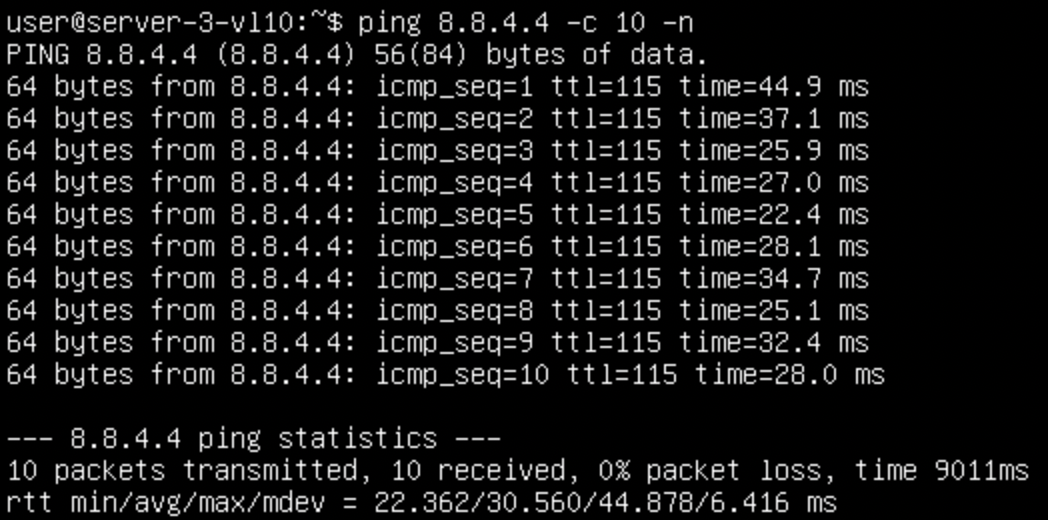

*via 10.0.1.4%default, [200/0], 00:07:31, bgp-64500, internal, tag 64520, segid: 100999 (Asymmetric) tunnelid: 0xa000104 encap: VXLANHowever, its showing the next hop as being 10.0.1.4 which is LEAF-4 but its sending it over the underlay. This wont work because if we actually look at the packet its sourced from the Anyast VTEP IP address that both leaves share:

So, to resolve this, there is a specific vPC configuration we need to enable. First, though, why is the BGP session down? By default, eBGP packets are sent with a TTL of 1, because eBGP expects its peers to be directly connected (this can be changed with ebgp-multihop). However, the BGP packets are now going over the peer link to LEAF-4, which decrements the TTL to 0 and is therefore unable to forward the packet any further. We can confirm that the Firewall is now reached via the peer link:

leaf-3# show ip arp 10.32.60.3 vrf external | begin Address

Address Age MAC Address Interface Flags

10.32.60.3 00:17:55 badb.eefb.ad30 Vlan3260

leaf-3# show mac address-table address badb.eefb.ad30 | begin VLAN

VLAN MAC Address Type age Secure NTFY Ports

---------+-----------------+--------+---------+------+----+------------------

3260 badb.eefb.ad30 dynamic NA F F vPC Peer-Link

We could get around this by raising the eBGP TTL on both the Firewall and the leaf, but that's not a great idea. Instead, there is a vPC enhancement that fixes this for us and allows us to form routing peerings over the vPC peer link!
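Following the TTL reasoning above, here is a minimal Python sketch of what happens to a TTL-1 eBGP packet when one routed hop is added to the path. It assumes LEAF-4 acts as that single extra hop and uses LEAF-3's router ID (10.32.60.1) as the source address purely for illustration; the Packet and forward names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    src: str
    dst: str
    ttl: int


def forward(packet: Packet, routed_hops: list) -> tuple:
    """Walk a packet through the routed hops that sit before its destination.

    Each hop decrements the TTL and drops the packet once it reaches 0
    (a real router would also send an ICMP Time Exceeded back).
    Returns (delivered, description).
    """
    for hop in routed_hops:
        packet.ttl -= 1
        if packet.ttl <= 0:
            return False, f"dropped at {hop} (TTL expired)"
    return True, f"delivered to {packet.dst} with TTL {packet.ttl}"


if __name__ == "__main__":
    # Assumption: in the failure scenario LEAF-4 is one extra routed hop.
    path = ["LEAF-4"]
    # Default eBGP behaviour: TTL 1, so the session packets never arrive.
    print(forward(Packet("10.32.60.1", "10.32.60.3", ttl=1), path))
    # prints (False, 'dropped at LEAF-4 (TTL expired)')
    # Raised TTL (the multihop workaround): the packet survives one decrement.
    print(forward(Packet("10.32.60.1", "10.32.60.3", ttl=2), path))
    # prints (True, 'delivered to 10.32.60.3 with TTL 1')
```

Sending with TTL 2 is roughly what raising the eBGP TTL on both ends would achieve, which is the workaround mentioned above rather than the approach taken here.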

Resolution

The configuration is completed under the vPC domain on both members:

vpc domain 200

  layer3 peer-router

Pretty quickly, we should see the peering come back up:

leaf-3# show bgp vrf external ipv4 unicast summary

BGP summary information for VRF external, address family IPv4 Unicast

BGP router identifier 10.32.60.1, local AS number 64500

BGP table version is 37, IPv4 Unicast config peers 1, capable peers 1

5 network entries and 24 paths using 1076 bytes of memory

BGP attribute entries [22/7920], BGP AS path entries [1/6]

BGP community entries [0/0], BGP clusterlist entries [10/40]

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd

10.32.60.3 4 64520 12 21 37 0 0 00:03:37 1

Now that the peering is up, let's check the routing table for 0.0.0.0/0 on LEAF-3:

leaf-3# show ip route 0.0.0.0/0 vrf all

IP Route Table for VRF "OVERLAY-TENANT1"

0.0.0.0/0, ubest/mbest: 1/0

*via 10.32.60.3%external, [20/0], 00:03:58, bgp-64500, external, tag 64520

IP Route Table for VRF "OVERLAY-TENANT2"

0.0.0.0/0, ubest/mbest: 1/0

*via 10.32.60.3%external, [20/0], 00:03:58, bgp-64500, external, tag 64520

IP Route Table for VRF "external"

0.0.0.0/0, ubest/mbest: 1/0

*via 10.32.60.3, [20/0], 00:03:58, bgp-64500, external, tag 64520

We now see the 'real' next hop for the default route, which is the Firewall. Let's see if that has helped our server out:

That looks to have done it. We have connectivity back, albeit over the peer link. There isn't really any other way to get this traffic to the Firewall in this failure scenario.

And that's it: we now have a more resilient fabric. Next up is another resilience topic, this one related specifically to vPC.
