In the last part, we set up the vPC domains on the leaf switches and all the interfaces down to our vPC member servers. In this part, we are going to look at the underlay setup. There aren't many differences from a traditional VXLAN deployment, but let's dive in!

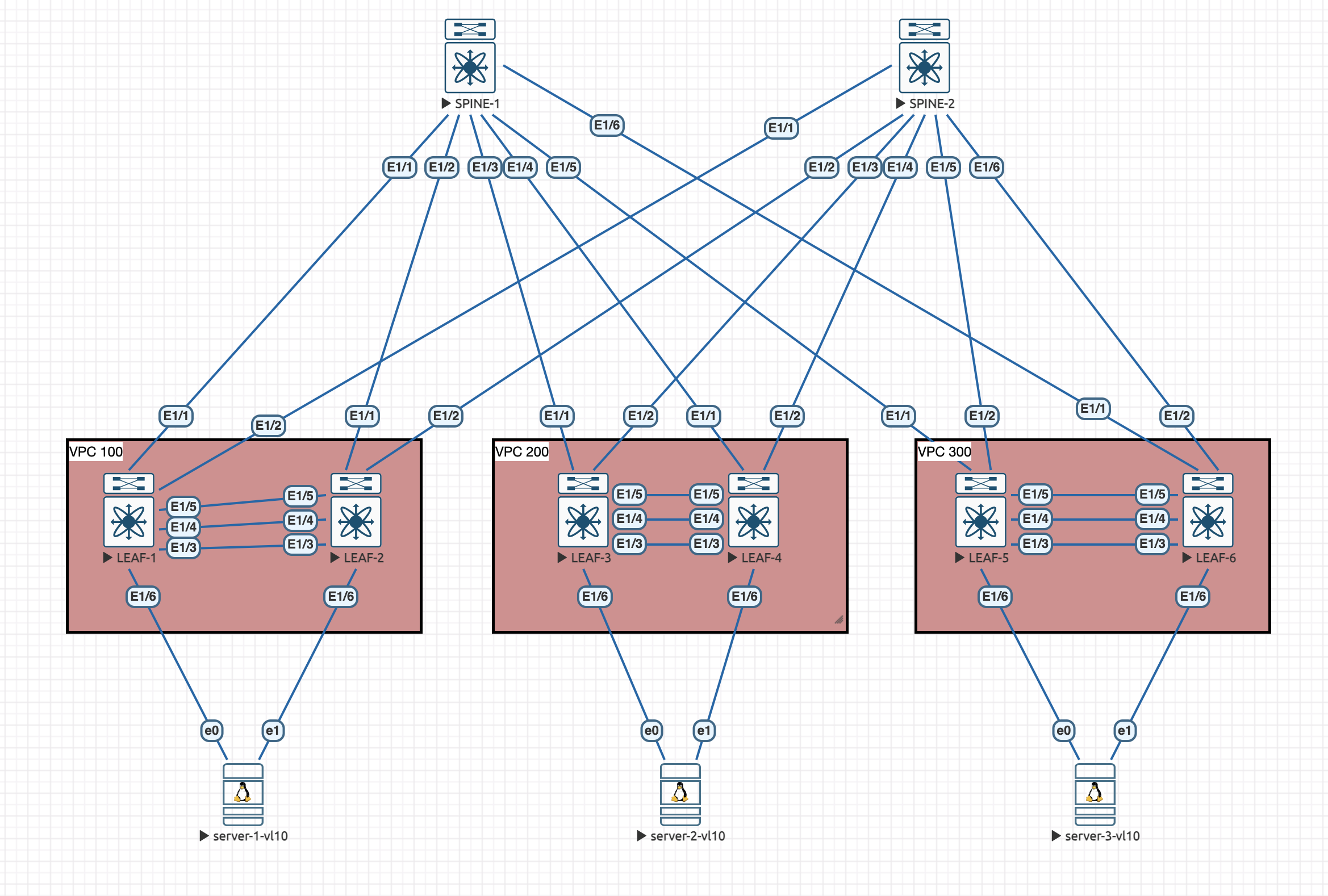

Here is the topology we will be working with:

First, enable all the features needed for the configuration on every switch:

```
feature ospf
feature bgp
feature pim
feature fabric forwarding
feature interface-vlan
feature vn-segment-vlan-based
feature nv overlay
```

Each switch needs a loopback0 interface configured with the following IP address:

spine-1: 10.0.0.1/32

spine-2: 10.0.0.2/32

leaf-1: 10.0.0.3/32

leaf-2: 10.0.0.4/32

leaf-3: 10.0.0.5/32

leaf-4: 10.0.0.6/32

leaf-5: 10.0.0.7/32

leaf-6: 10.0.0.8/32

These loopbacks are used by the routing protocols. They will not be used as the VTEP source, because it's best practice to keep the two separate.
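If you template the base configuration instead of typing it on eight switches, the Python sketch below shows one way to do it. It renders the feature list and the loopback0 stanza for each switch from the addressing plan above, and it checks that no two switches share a loopback0 address. The names LOOPBACK0, check_unique_loopbacks and render_base_config are hypothetical illustrations and are not part of any Cisco tooling.

```python
import ipaddress

# Features enabled on every switch, as listed above.
FEATURES = [
    "ospf",
    "bgp",
    "pim",
    "fabric forwarding",
    "interface-vlan",
    "vn-segment-vlan-based",
    "nv overlay",
]

# loopback0 addressing plan from the list above (hypothetical data layout).
LOOPBACK0 = {
    "spine-1": "10.0.0.1/32",
    "spine-2": "10.0.0.2/32",
    "leaf-1": "10.0.0.3/32",
    "leaf-2": "10.0.0.4/32",
    "leaf-3": "10.0.0.5/32",
    "leaf-4": "10.0.0.6/32",
    "leaf-5": "10.0.0.7/32",
    "leaf-6": "10.0.0.8/32",
}


def check_unique_loopbacks(plan: dict[str, str]) -> None:
    """Raise ValueError if two switches would share a loopback0 address."""
    seen = {}
    for switch, cidr in plan.items():
        iface = ipaddress.ip_interface(cidr)
        if iface in seen:
            raise ValueError(f"{switch} reuses {iface} from {seen[iface]}")
        seen[iface] = switch


def render_base_config(switch: str) -> str:
    """Return the feature commands plus the loopback0 stanza for one switch."""
    lines = [f"feature {name}" for name in FEATURES]
    lines.append("interface loopback0")
    lines.append(f"  ip address {LOOPBACK0[switch]}")
    return "\n".join(lines)


if __name__ == "__main__":
    check_unique_loopbacks(LOOPBACK0)
    print(render_base_config("leaf-1"))
```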

Routing Configuration

All switches have a basic OSPF configuration:

```
router ospf UNDERLAY
  log-adjacency-changes
```

Then each loopback0 interface is configured for OSPF in area 0.0.0.0:

```
interface loopback0
  ip router ospf UNDERLAY area 0.0.0.0
```

Interface Configuration

The spine interfaces towards the leaves are configured as layer 3 point-to-point ports that borrow the loopback0 address using ip unnumbered:

```
interface Ethernet1/1-6
  no switchport
  no shutdown
  mtu 9216
  medium p2p
  ip unnumbered loopback0
  ip router ospf UNDERLAY area 0.0.0.0
```

The leaf interfaces towards the spines are configured the same way, as layer 3 ports borrowing the loopback0 address:

```
interface Ethernet1/1-2
  no switchport
  no shutdown
  mtu 9216
  medium p2p
  ip unnumbered loopback0
  ip router ospf UNDERLAY area 0.0.0.0
```

Using ip unnumbered is really powerful. We don't have to plan, configure or keep track of a /31 transit subnet for every spine-to-leaf link.
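To put a number on what ip unnumbered saves here, the Python sketch below counts the spine-to-leaf links, given that every leaf has one uplink to each spine. It then carves out the /31 subnets that numbered links would have needed. The 10.0.255.0/24 transit pool and the numbered_transit_plan helper are made up for the arithmetic only. Nothing in this topology uses them.

```python
import ipaddress
from itertools import product

SPINES = ["spine-1", "spine-2"]
LEAVES = [f"leaf-{n}" for n in range(1, 7)]

# Hypothetical transit pool, only used to show what numbered links would consume.
TRANSIT_POOL = ipaddress.ip_network("10.0.255.0/24")


def numbered_transit_plan(pool) -> dict[tuple[str, str], tuple[str, str]]:
    """Assign one /31 per spine-leaf link: (spine, leaf) -> (spine IP, leaf IP)."""
    links = list(product(SPINES, LEAVES))  # every leaf connects to every spine
    subnets = list(pool.subnets(new_prefix=31))
    if len(subnets) < len(links):
        raise ValueError("transit pool too small for one /31 per link")
    plan = {}
    for link, subnet in zip(links, subnets):
        # Iterating a /31 yields exactly two addresses, one per end of the link.
        spine_ip, leaf_ip = (str(addr) for addr in subnet)
        plan[link] = (spine_ip, leaf_ip)
    return plan


if __name__ == "__main__":
    plan = numbered_transit_plan(TRANSIT_POOL)
    print(f"{len(plan)} links -> {len(plan)} /31 subnets, {2 * len(plan)} addresses to manage")
```

With ip unnumbered, none of those 24 addresses exist. Each link simply borrows the loopback0 address that is already present on both ends.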

We should now have basic connectivity within the topology. Let's check the routing table on spine-1 for the OSPF routes:

```
SPINE-1# show ip route ospf-UNDERLAY
IP Route Table for VRF "default"

10.0.0.2/32, ubest/mbest: 6/0
    *via 10.0.0.3, Eth1/1, [110/81], 00:00:32, ospf-UNDERLAY, intra
    *via 10.0.0.4, Eth1/2, [110/81], 00:00:32, ospf-UNDERLAY, intra
    *via 10.0.0.5, Eth1/3, [110/81], 00:00:30, ospf-UNDERLAY, intra
    *via 10.0.0.6, Eth1/4, [110/81], 00:00:29, ospf-UNDERLAY, intra
    *via 10.0.0.7, Eth1/5, [110/81], 00:00:32, ospf-UNDERLAY, intra
    *via 10.0.0.8, Eth1/6, [110/81], 0.000000, ospf-UNDERLAY, intra
10.0.0.3/32, ubest/mbest: 1/0
    *via 10.0.0.3, Eth1/1, [110/41], 00:00:32, ospf-UNDERLAY, intra
10.0.0.4/32, ubest/mbest: 1/0
    *via 10.0.0.4, Eth1/2, [110/41], 00:00:32, ospf-UNDERLAY, intra
10.0.0.5/32, ubest/mbest: 1/0
    *via 10.0.0.5, Eth1/3, [110/41], 00:00:30, ospf-UNDERLAY, intra
10.0.0.6/32, ubest/mbest: 1/0
    *via 10.0.0.6, Eth1/4, [110/41], 00:00:29, ospf-UNDERLAY, intra
10.0.0.7/32, ubest/mbest: 1/0
    *via 10.0.0.7, Eth1/5, [110/41], 00:00:32, ospf-UNDERLAY, intra
10.0.0.8/32, ubest/mbest: 1/0
    *via 10.0.0.8, Eth1/6, [110/41], 0.000000, ospf-UNDERLAY, intra
```

The above output shows that spine-1 has learned the loopback0 of every other switch. Each leaf is a single hop away, and spine-2 is reachable through all six leaves at equal cost (ubest 6). Now we can move on to the multicast setup.
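If you would rather check this with a script than by eye, here is a Python sketch that parses the show ip route text above into prefixes and next hops. The text is pasted in as a string rather than pulled from the switch. The sketch also checks the metrics. They are consistent with an OSPF cost of 40 per fabric link plus 1 for the destination loopback. That gives 41 for a directly attached leaf and 81 for spine-2, which is two links away. The per-link cost of 40 is inferred from this output and is an assumption (presumably 1 Gbps lab links measured against the NX-OS default 40 Gbps reference bandwidth), so adjust LINK_COST for your own hardware. The helper names are hypothetical.

```python
import re

# Excerpt of the SPINE-1 output above (uptime column left exactly as captured).
SAMPLE = """\
10.0.0.2/32, ubest/mbest: 6/0
    *via 10.0.0.3, Eth1/1, [110/81], 00:00:32, ospf-UNDERLAY, intra
    *via 10.0.0.4, Eth1/2, [110/81], 00:00:32, ospf-UNDERLAY, intra
    *via 10.0.0.5, Eth1/3, [110/81], 00:00:30, ospf-UNDERLAY, intra
    *via 10.0.0.6, Eth1/4, [110/81], 00:00:29, ospf-UNDERLAY, intra
    *via 10.0.0.7, Eth1/5, [110/81], 00:00:32, ospf-UNDERLAY, intra
    *via 10.0.0.8, Eth1/6, [110/81], 0.000000, ospf-UNDERLAY, intra
10.0.0.3/32, ubest/mbest: 1/0
    *via 10.0.0.3, Eth1/1, [110/41], 00:00:32, ospf-UNDERLAY, intra
"""

PREFIX_RE = re.compile(r"^(\d+\.\d+\.\d+\.\d+/\d+), ubest/mbest: (\d+)/\d+")
VIA_RE = re.compile(r"^\s*\*via ([^,]+), ([^,]+), \[(\d+)/(\d+)\]")

LINK_COST = 40  # assumption inferred from the output above, not a fixed value
LOOPBACK_COST = 1


def parse_routes(text: str) -> dict[str, list[tuple[str, str, int]]]:
    """Map each prefix to its (next hop, interface, metric) entries."""
    routes: dict[str, list[tuple[str, str, int]]] = {}
    current = None
    for line in text.splitlines():
        prefix = PREFIX_RE.match(line)
        if prefix:
            current = prefix.group(1)
            routes[current] = []
            continue
        via = VIA_RE.match(line)
        if via and current is not None:
            next_hop, iface, _distance, metric = via.groups()
            routes[current].append((next_hop, iface, int(metric)))
    return routes


def expected_metric(links: int) -> int:
    """OSPF metric to a loopback that is `links` fabric links away."""
    return links * LINK_COST + LOOPBACK_COST


if __name__ == "__main__":
    routes = parse_routes(SAMPLE)
    assert len(routes["10.0.0.2/32"]) == 6  # ECMP via all six leaves
    assert all(m == expected_metric(2) for _, _, m in routes["10.0.0.2/32"])
    assert routes["10.0.0.3/32"] == [("10.0.0.3", "Eth1/1", expected_metric(1))]
    print("underlay routes look as expected")
```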

Multicast Configuration

PIM is configured so that the underlay can replicate VXLAN BUM (broadcast, unknown unicast and multicast) traffic between VTEPs. In this topology, we will configure the spines as the RPs.

The configuration on the spines should be:

```
ip pim rp-address 10.0.0.99 group-list 224.0.0.0/4
ip pim ssm range 232.0.0.0/8
ip pim anycast-rp 10.0.0.99 10.0.0.1
ip pim anycast-rp 10.0.0.99 10.0.0.2

interface loopback1
  ip address 10.0.0.99/32
  ip router ospf UNDERLAY area 0.0.0.0
  ip pim sparse-mode

interface loopback0
  ip pim sparse-mode

interface Ethernet1/1-6
  ip pim sparse-mode
```

The configuration on the leaves is a little less involved:

```
ip pim rp-address 10.0.0.99 group-list 224.0.0.0/4
ip pim ssm range 232.0.0.0/8

interface loopback0
  ip pim sparse-mode

interface Ethernet1/1-2
  ip pim sparse-mode
```

This configuration makes both spines RPs that share the anycast RP address 10.0.0.99, and it points the leaves at that address. If one spine goes offline, the leaves can still reach 10.0.0.99 through the other, which makes the setup redundant.
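The group-list and SSM range above decide which groups the anycast RP serves. The Python sketch below is a simplified model of that lookup, written only to illustrate the configuration. Groups in 232.0.0.0/8 are treated as SSM and never use an RP. Any other group in 224.0.0.0/4 maps to 10.0.0.99. The function name rp_for_group is hypothetical, and the model deliberately ignores special cases such as the link-local 224.0.0.0/24 block.

```python
import ipaddress
from typing import Optional

RP_ADDRESS = ipaddress.ip_address("10.0.0.99")    # anycast RP shared by both spines
ASM_GROUPS = ipaddress.ip_network("224.0.0.0/4")  # ip pim rp-address ... group-list
SSM_GROUPS = ipaddress.ip_network("232.0.0.0/8")  # ip pim ssm range


def rp_for_group(group: str) -> Optional[ipaddress.IPv4Address]:
    """Return the RP for a multicast group, or None if the group is SSM."""
    addr = ipaddress.ip_address(group)
    # Check SSM first: 232.0.0.0/8 sits inside 224.0.0.0/4 and must not use the RP.
    if addr in SSM_GROUPS:
        return None
    if addr in ASM_GROUPS:
        return RP_ADDRESS
    raise ValueError(f"{group} is not an IPv4 multicast group")


if __name__ == "__main__":
    for g in ("239.1.1.1", "232.1.1.1"):
        print(g, "->", rp_for_group(g) or "SSM, no RP")
```

Because both spines own 10.0.0.99 and advertise it into OSPF, each leaf simply uses whichever spine is closest by IGP cost for the RP.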

We can validate the Multicast setup with the following command on the spines:

```
SPINE-1# show ip pim rp
PIM RP Status Information for VRF "default"
BSR disabled
Auto-RP disabled
BSR RP Candidate policy: None
BSR RP policy: None
Auto-RP Announce policy: None
Auto-RP Discovery policy: None

Anycast-RP 10.0.0.99 members:
  10.0.0.1*  10.0.0.2

RP: 10.0.0.99*, (0),
 uptime: 00:00:51   priority: 255,
 RP-source: (local),
 group ranges:
 224.0.0.0/4
```

We can see from the above output that the multicast setup is in place. The Anycast-RP set contains both spines (the asterisk marks the local switch, spine-1), and 10.0.0.99 is the RP for 224.0.0.0/4.
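To check the Anycast-RP set with a script, you could start from the minimal Python sketch below, which pulls the member list out of the show ip pim rp text. It assumes the members are printed on the line after the Anycast-RP header, as in the output above, with an asterisk marking the local switch. The helper name anycast_rp_members is hypothetical.

```python
import re

# Excerpt of the SPINE-1 output above.
SAMPLE = """\
Anycast-RP 10.0.0.99 members:
  10.0.0.1*  10.0.0.2

RP: 10.0.0.99*, (0),
"""

HEADER_RE = re.compile(r"^\s*Anycast-RP (\S+) members:")


def anycast_rp_members(output: str) -> dict[str, list[tuple[str, bool]]]:
    """Map each anycast RP address to [(member address, is_local), ...]."""
    result: dict[str, list[tuple[str, bool]]] = {}
    lines = output.splitlines()
    for i, line in enumerate(lines):
        header = HEADER_RE.match(line)
        if not header:
            continue
        # Members sit on the next non-empty line; "*" marks the local switch.
        for member_line in lines[i + 1:]:
            if member_line.strip():
                result[header.group(1)] = [
                    (tok.rstrip("*"), tok.endswith("*")) for tok in member_line.split()
                ]
                break
    return result


if __name__ == "__main__":
    print(anycast_rp_members(SAMPLE))
```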

Now we can look at the final part of the underlay setup: the NVE configuration.

NVE Configuration

NVE (Network Virtualization Edge) is a logical interface where VXLAN encapsulation and decapsulation happen. It is also called the VTEP, which stands for VXLAN Tunnel Endpoint. This configuration is for the leaves only, because they are the ones doing the encapsulation and decapsulation.
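To make "encapsulation" concrete, here is what the NVE adds to each frame per RFC 7348. It prepends an 8-byte VXLAN header, which carries an I flag and a 24-bit VNI. It then adds outer UDP, IPv4 and Ethernet headers. The Python sketch below builds only the VXLAN header and adds up the overhead, which is why the fabric links above use a large MTU. The VNI 10100 is an arbitrary example value. The overhead figures assume an IPv4 underlay, no outer 802.1Q tag and an untagged inner frame.

```python
import struct

VXLAN_FLAGS_I = 0x08  # "I" flag: the VNI field is valid (RFC 7348)

# Header sizes in bytes (IPv4 underlay, no 802.1Q tags).
OUTER_ETHERNET = 14
OUTER_IPV4 = 20
OUTER_UDP = 8
VXLAN = 8
INNER_ETHERNET = 14
TOTAL_OVERHEAD = OUTER_ETHERNET + OUTER_IPV4 + OUTER_UDP + VXLAN


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte header: flags, 3 reserved bytes, 24-bit VNI, 1 reserved byte."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # "!B3xI": 1 flag byte, 3 zero pad bytes, then a 32-bit word holding VNI << 8.
    return struct.pack("!B3xI", VXLAN_FLAGS_I, vni << 8)


def required_underlay_ip_mtu(inner_ip_mtu: int = 1500) -> int:
    """Outer IP packet size needed to carry an inner IP packet of inner_ip_mtu bytes."""
    return inner_ip_mtu + INNER_ETHERNET + VXLAN + OUTER_UDP + OUTER_IPV4


if __name__ == "__main__":
    print(vxlan_header(10100).hex())
    print(required_underlay_ip_mtu(), "byte outer IP packet for a 1500-byte inner packet")
```

A 1550-byte outer packet fits comfortably within the 9216-byte MTU configured on the fabric links.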

This is also where we begin to see some vPC-specific changes. First, we need to create a new loopback1 interface on all the leaves:

leaf-1: 10.0.1.1/32

leaf-2: 10.0.1.2/32

leaf-3: 10.0.1.3/32

leaf-4: 10.0.1.4/32

leaf-5: 10.0.1.5/32

leaf-6: 10.0.1.6/32

Each vPC pair then also needs a common secondary IP address on loopback1, shared by both members:

vpc domain 100: 10.0.1.101/32

vpc domain 200: 10.0.1.102/32

vpc domain 300: 10.0.1.103/32

For example, on LEAF-1:

```
interface loopback1
  ip address 10.0.1.1/32
  ip address 10.0.1.101/32 secondary
  ip router ospf UNDERLAY area 0.0.0.0
  ip pim sparse-mode
```

And on LEAF-2:

```
interface loopback1
  ip address 10.0.1.2/32
  ip address 10.0.1.101/32 secondary
  ip router ospf UNDERLAY area 0.0.0.0
  ip pim sparse-mode
```

We need the shared address because of the way VXLAN routes are advertised: the NVE IP address is used as the BGP next hop. In a non-vPC environment, this is simply the loopback of a single switch. If we did the same with vPC configured, the two switches would act almost independently without a shared VTEP IP address. Each would send its own BGP routes with its own VTEP IP. With a shared IP, traffic sent to that address can be serviced by either switch, for example if one of them goes offline. Reaching the shared address relies purely on the underlay OSPF routing:

```
SPINE-1# show ip route 10.0.1.64/26 longer-prefixes
IP Route Table for VRF "default"

10.0.1.101/32, ubest/mbest: 2/0
    *via 10.0.0.3, Eth1/1, [110/41], 00:06:30, ospf-UNDERLAY, intra
    *via 10.0.0.4, Eth1/2, [110/41], 00:06:30, ospf-UNDERLAY, intra
10.0.1.102/32, ubest/mbest: 2/0
    *via 10.0.0.5, Eth1/3, [110/41], 00:06:32, ospf-UNDERLAY, intra
    *via 10.0.0.6, Eth1/4, [110/41], 00:06:33, ospf-UNDERLAY, intra
10.0.1.103/32, ubest/mbest: 2/0
    *via 10.0.0.7, Eth1/5, [110/41], 00:06:34, ospf-UNDERLAY, intra
    *via 10.0.0.8, Eth1/6, [110/41], 00:06:36, ospf-UNDERLAY, intra
```

As seen above, each shared vPC loopback IP is reachable through both members of its vPC pair, so the path is redundant from a routing perspective. With two equal-cost paths, we should also get some load balancing across them with ECMP. This approach is commonly called Anycast VTEP.
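The loopback1 plan follows a simple pattern: a unique primary address per leaf plus a secondary address shared by both members of a vPC pair. The Python sketch below renders that stanza for any leaf. It also shows why the longer-prefixes query above returns only the shared addresses. 10.0.1.64/26 spans 10.0.1.64 to 10.0.1.127, which contains .101 to .103 but none of the .1 to .6 primaries. The data layout and helper names are hypothetical. The leaf-to-domain pairing is taken from the routing output above (10.0.1.102 via leaf-3 and leaf-4, 10.0.1.103 via leaf-5 and leaf-6).

```python
import ipaddress

# vPC domain -> (member leaves, shared anycast VTEP address); pairing per the output above.
VPC_DOMAINS = {
    100: (("leaf-1", "leaf-2"), "10.0.1.101"),
    200: (("leaf-3", "leaf-4"), "10.0.1.102"),
    300: (("leaf-5", "leaf-6"), "10.0.1.103"),
}
# Unique primary loopback1 address per leaf.
PRIMARY = {f"leaf-{n}": f"10.0.1.{n}" for n in range(1, 7)}


def shared_vtep(leaf: str) -> str:
    """Return the anycast VTEP address of the vPC pair this leaf belongs to."""
    for members, shared in VPC_DOMAINS.values():
        if leaf in members:
            return shared
    raise KeyError(f"{leaf} is not in any vPC domain")


def loopback1_config(leaf: str) -> str:
    """Render loopback1 with the unique primary and the shared secondary address."""
    return "\n".join([
        "interface loopback1",
        f"  ip address {PRIMARY[leaf]}/32",
        f"  ip address {shared_vtep(leaf)}/32 secondary",
        "  ip router ospf UNDERLAY area 0.0.0.0",
        "  ip pim sparse-mode",
    ])


def addresses_in(prefix: str) -> list[str]:
    """Loopback1 addresses that a 'longer-prefixes' lookup for prefix would match."""
    net = ipaddress.ip_network(prefix)
    all_addresses = list(PRIMARY.values()) + [shared for _, shared in VPC_DOMAINS.values()]
    return [a for a in all_addresses if ipaddress.ip_address(a) in net]


if __name__ == "__main__":
    print(loopback1_config("leaf-2"))
    print(addresses_in("10.0.1.64/26"))
```

The ECMP paths shown above only exist because both leaves in each pair advertise the shared /32 into OSPF.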

Now we can configure the NVE interface.

This is the base configuration for the nve1 interface:

```
interface nve1
  no shutdown
  host-reachability protocol bgp
  advertise virtual-rmac
  source-interface loopback1
```

There is one extra command here compared with a normal, non-vPC leaf: advertise virtual-rmac. It makes the switch advertise the vPC pair's virtual router MAC (RMAC) along with the shared VTEP address, instead of the device's own hardware MAC address. This is required to prevent some black-holing scenarios. We will see this in action later.

We can check the status of the nve interface:

```
LEAF-1# show interface nve 1
nve1 is up
admin state is up, Hardware: NVE
  MTU 9216 bytes
  Encapsulation VXLAN
  Auto-mdix is turned off
  RX
    ucast: 0 pkts, 0 bytes - mcast: 0 pkts, 0 bytes
  TX
    ucast: 0 pkts, 0 bytes - mcast: 0 pkts, 0 bytes
```

The above output shows the interface is in an UP state.
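A scripted health check for the NVE interface can be as small as the Python sketch below. It pulls the operational state, admin state and MTU out of the show interface nve 1 text captured above. The regular expressions are written against this particular output and are an assumption, since other NX-OS releases may format it differently. The helper name nve_status is hypothetical.

```python
import re

# Excerpt of the LEAF-1 output above.
SAMPLE = """\
nve1 is up
admin state is up, Hardware: NVE
  MTU 9216 bytes
  Encapsulation VXLAN
"""


def nve_status(output: str) -> dict[str, object]:
    """Extract interface name, oper/admin state and MTU from 'show interface nve' text."""
    oper = re.search(r"^\s*(nve\d+) is (\w+)", output, re.MULTILINE)
    admin = re.search(r"admin state is (\w+)", output)
    mtu = re.search(r"MTU (\d+) bytes", output)
    if not (oper and admin and mtu):
        raise ValueError("unexpected 'show interface nve' format")
    return {
        "interface": oper.group(1),
        "oper": oper.group(2),
        "admin": admin.group(1),
        "mtu": int(mtu.group(1)),
    }


if __name__ == "__main__":
    status = nve_status(SAMPLE)
    assert status["oper"] == "up" and status["admin"] == "up", status
    print(status)
```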

We are done with the underlay setup now, and we will move on to the overlay configuration in the next part!

3 Comments

yauheni · 18th August 2025 at 1:19 pm

Hi Nick, thank you for the detailed setup. I would like to clarify a few questions about the VXLAN fabric configuration. You described how to set up an external connection, but how should we organize a DMZ zone if we use an NGFW? Should we also use the external VRF for the DMZ zone, or is there another way to do this?

Nick Carlton · 25th October 2025 at 10:03 pm

Hey,

With DMZs, these are normally Layer 2 only from a fabric perspective and instead of having a L3 gateway within the Fabric, their Gateway would be on a Firewall. So you would create a standard L2VNI without anycast gateways and then configure a member port/VPC toward the firewall and ‘extend’ that L2VNI outside of the fabric and put a Gateway on the Firewall. This means that any traffic coming in or out of that network must pass via the Firewall.

If you just wanted segmentation and not firewall capabilities, you could put the DMZ in its own Tenant VRF without any leaking, but that would get quite complex.

Hope this helps.

Thanks

Nick

Benedict · 10th January 2026 at 3:57 am

Hi Nick, I am very grateful to you, because in less than 3 hours you have taught me so much about VXLAN. I am very, very grateful.